Student Total Score Report

[hadoop | MapReduce Lab: Student Total Score Report and Student Average Score]

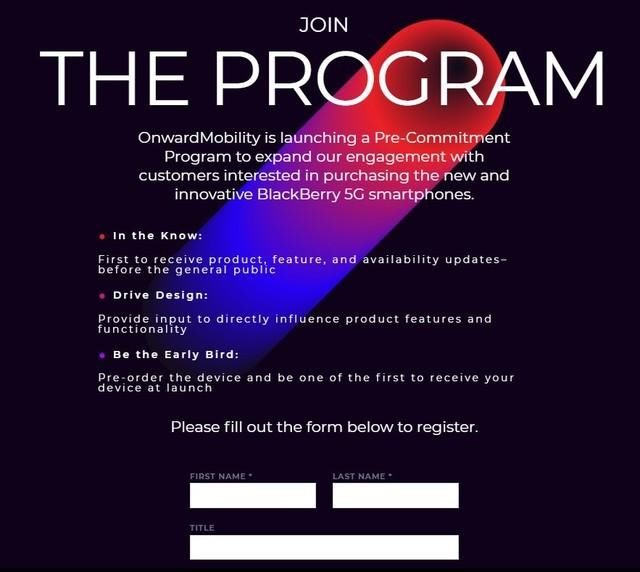


Map class

```java
package StudentScore_06;

import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Mapper;

import java.io.IOException;
import java.util.StringTokenizer;

// Input: (byte offset of the line, line text). Output: (student name, score).
public class MyMap extends Mapper<LongWritable, Text, Text, IntWritable> {

    @Override
    protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {
        // Read one line of input
        String val = value.toString();
        // Split the input on newlines (with the default TextInputFormat each call already receives a single line)
        StringTokenizer stringTokenizer = new StringTokenizer(val, "\n");
        while (stringTokenizer.hasMoreElements()) {
            StringTokenizer tmp = new StringTokenizer(stringTokenizer.nextToken());
            // Skip blank or malformed lines instead of throwing NoSuchElementException
            if (tmp.countTokens() < 2) {
                continue;
            }
            // Split the line into name and score and write the pair to the context
            String username = tmp.nextToken();
            String score = tmp.nextToken();
            context.write(new Text(username), new IntWritable(Integer.parseInt(score)));
        }
    }
}
```

Reduce class

```java
package StudentScore_06;

import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Reducer;

import java.io.IOException;
import java.util.Iterator;

// Input: (student name, all of that student's scores). Output: (student name, total score).
public class MyReduce extends Reducer<Text, IntWritable, Text, IntWritable> {

    @Override
    protected void reduce(Text key, Iterable<IntWritable> values, Context context) throws IOException, InterruptedException {
        // Get an iterator over all values that share this key
        Iterator<IntWritable> iterator = values.iterator();
        int sum = 0;
        // Loop over every score for the same student and add them up
        while (iterator.hasNext()) {
            int v = iterator.next().get();
            sum += v;
        }
        context.write(key, new IntWritable(sum));
    }
}
```
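To check what MyMap and this reducer produce together without starting Hadoop, the map, shuffle and reduce steps can be simulated in memory. This is a minimal plain-Java sketch, not part of the original lab: the class name `LocalTotalScore`, its methods and the sample data are hypothetical, and it assumes each input line has the form `name score` separated by whitespace, which is what MyMap parses.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.StringTokenizer;
import java.util.TreeMap;

// Hypothetical in-memory simulation of the total-score job; uses no Hadoop classes.
public class LocalTotalScore {

    // "Map" + "shuffle": parse each "name score" line (as MyMap does) and group
    // the scores by name. TreeMap keeps the keys sorted, like reducer input keys.
    public static Map<String, List<Integer>> mapAndShuffle(List<String> lines) {
        Map<String, List<Integer>> grouped = new TreeMap<>();
        for (String line : lines) {
            StringTokenizer tokens = new StringTokenizer(line);
            if (tokens.countTokens() < 2) {
                continue; // skip blank or malformed lines
            }
            String name = tokens.nextToken();
            int score = Integer.parseInt(tokens.nextToken());
            grouped.computeIfAbsent(name, k -> new ArrayList<>()).add(score);
        }
        return grouped;
    }

    // "Reduce": sum all scores for each name, as MyReduce does.
    public static Map<String, Integer> totals(List<String> lines) {
        Map<String, Integer> result = new TreeMap<>();
        for (Map.Entry<String, List<Integer>> e : mapAndShuffle(lines).entrySet()) {
            int sum = 0;
            for (int v : e.getValue()) {
                sum += v;
            }
            result.put(e.getKey(), sum);
        }
        return result;
    }

    public static void main(String[] args) {
        List<String> input = Arrays.asList("tom 90", "amy 80", "tom 85", "amy 75");
        // Print key<TAB>value, the default layout of TextOutputFormat
        totals(input).forEach((name, sum) -> System.out.println(name + "\t" + sum));
    }
}
```

Running `main` prints `amy	155` followed by `tom	175`; the keys come out in sorted order, similar to the output of a job with a single reduce task.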

Reduce class (computing each student's average score)

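```java
package StudentAvgScore_07;

import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Reducer;

import java.io.IOException;
import java.util.Iterator;

// Input: (student name, all of that student's scores). Output: (student name, average score).
public class MyReduce extends Reducer<Text, IntWritable, Text, IntWritable> {

    @Override
    protected void reduce(Text key, Iterable<IntWritable> values, Context context) throws IOException, InterruptedException {
        // Get an iterator over all values that share this key
        Iterator<IntWritable> iterator = values.iterator();
        int count = 0;
        int sum = 0;
        // Loop over every score for the same student, adding them up and counting them
        while (iterator.hasNext()) {
            int v = iterator.next().get();
            sum += v;
            count++;
        }
        // Integer division: the fractional part of the average is truncated.
        // count is at least 1, because reduce is only called for keys that have values.
        int avg = sum / count;
        context.write(key, new IntWritable(avg));
    }
}
```

Job class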

```java
package StudentScore_06;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

import java.io.IOException;

public class TestJob {

    public static void main(String[] args) throws IOException, ClassNotFoundException, InterruptedException {
        Configuration conf = new Configuration();
        // 1. Get the job object
        Job job = Job.getInstance(conf);
        // 2. Set the main class
        job.setJarByClass(TestJob.class);
        // 3. Set the mapper and reducer classes
        job.setMapperClass(MyMap.class);
        job.setReducerClass(MyReduce.class);
        // 4. Set the map and reduce output types
        job.setMapOutputKeyClass(Text.class);
        job.setMapOutputValueClass(IntWritable.class);
        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(IntWritable.class);
        // 5. Set the input and output paths (the output directory must not exist yet)
        FileInputFormat.setInputPaths(job, new Path("file:///simple/source.txt"));
        FileOutputFormat.setOutputPath(job, new Path("file:///simple/output"));
        // 6. Submit the job, wait for it to finish, and exit with 0 on success or 1 on failure
        System.exit(job.waitForCompletion(true) ? 0 : 1);
    }
}
```
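The average reducer in StudentAvgScore_07 computes `sum / count` on two ints, so Java integer division drops the fractional part. The minimal plain-Java sketch below compares that arithmetic with a floating-point average for one student's scores; the class `AvgCheck`, its methods and the sample scores are hypothetical and not part of the original lab. If a fractional average were wanted, the reducer would have to emit `DoubleWritable` and the driver would need `job.setOutputValueClass(DoubleWritable.class)` while keeping `IntWritable` as the map output value class.

```java
import java.util.Arrays;
import java.util.List;

// Hypothetical check of the averaging arithmetic used by StudentAvgScore_07.MyReduce.
public class AvgCheck {

    // Same arithmetic as the reducer: int / int, so the fraction is truncated.
    public static int intAverage(List<Integer> scores) {
        int sum = 0;
        for (int s : scores) {
            sum += s;
        }
        return sum / scores.size();
    }

    // Floating-point alternative: cast before dividing to keep the fraction.
    public static double doubleAverage(List<Integer> scores) {
        int sum = 0;
        for (int s : scores) {
            sum += s;
        }
        return (double) sum / scores.size();
    }

    public static void main(String[] args) {
        List<Integer> scores = Arrays.asList(90, 85, 88); // sum = 263
        System.out.println(intAverage(scores));    // 87
        System.out.println(doubleAverage(scores)); // about 87.67
    }
}
```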